这个故事是十大网博靠谱平台一个人工智能驱动的顾问聊天机器人的,我是基于它工作的 LangChain and Chainlit. 这个机器人向潜在客户询问他们在企业数据空间中的问题, 开发动态调查问卷,以便更好地了解问题. 在收集了用户问题的足够信息后,它给出了解决问题的建议. Whilst formulating questions it also tries to check if the user is confused and needs some questions answered. 如果是这种情况,它会尝试回复它.

聊天机器人是围绕与人工智能治理相关主题的知识库构建的, security, data quality, etc. 但你也可以选择其他话题.

该知识库存储在矢量数据库(FAISS),并在每个步骤中用于提出问题或提供建议.

然而,这个聊天机器人可以基于任何知识库,并在不同的环境中使用. 所以你可以把它作为其他咨询聊天机器人的蓝图.

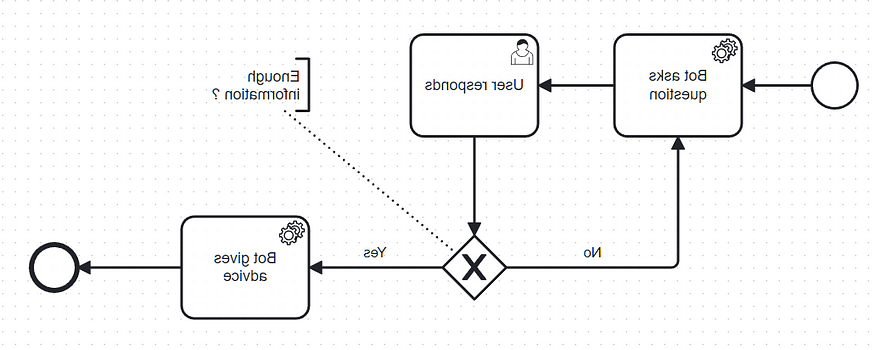

Interaction Flow

The normal flow of a chatbot is simple: the user asks a question and the bot answers it and so on. 机器人通常会记住之前的互动,所以有一个历史记录.

然而,这个机器人的交互是不同的. It looks like this:

AI driven consultant chatbot interaction

In this case the chatbot asks a question, 用户回答并重复这个交互几次. If the accumulated knowledge is good enough for a response or the number of questions reaches a certain threshold, a response is given, otherwise another question is asked.

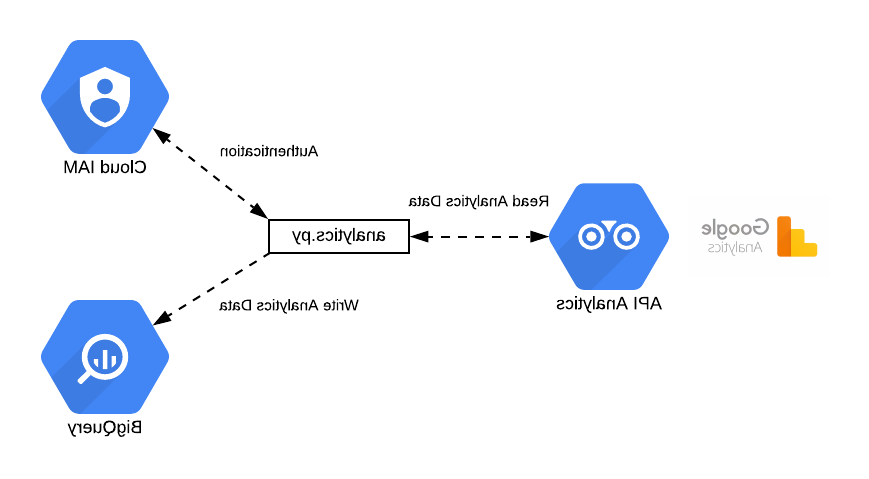

Rough Architecture

以下是该应用程序的参与者:

主要参与者是人工智能驱动的顾问聊天机器人

We have 4 participants:

The user

The application which orchestrates the workflow between ChatGPT, the knowledge base and the user.

ChatGPT 4 (gpt-4–0613)

The knowledge base (a vector database using FAISS)

We have tried ChatGPT 3.但结果不是很好,很难产生有意义的问题. ChatGPT 4 (gpt-4–0613) seemed to produce much better questions and advices and be more stable too.

我们还试验了最新的ChatGPT 4模型(gpt-4 - 1106预览版), GPT 4 Turbo), 但是我们经常从OpenAI函数调用中遇到意想不到的结果. 所以我们经常会看到这样的错误日志:

File "pydantic/main.py", line 341, in pydantic.main.BaseModel.__init__

pydantic.error_wrappers.ValidationError:响应标签的2个验证错误

extracted_questions

field required (type=value_error.missing)

questions_related_to_data_analytics

field required (type=value_error.missing)

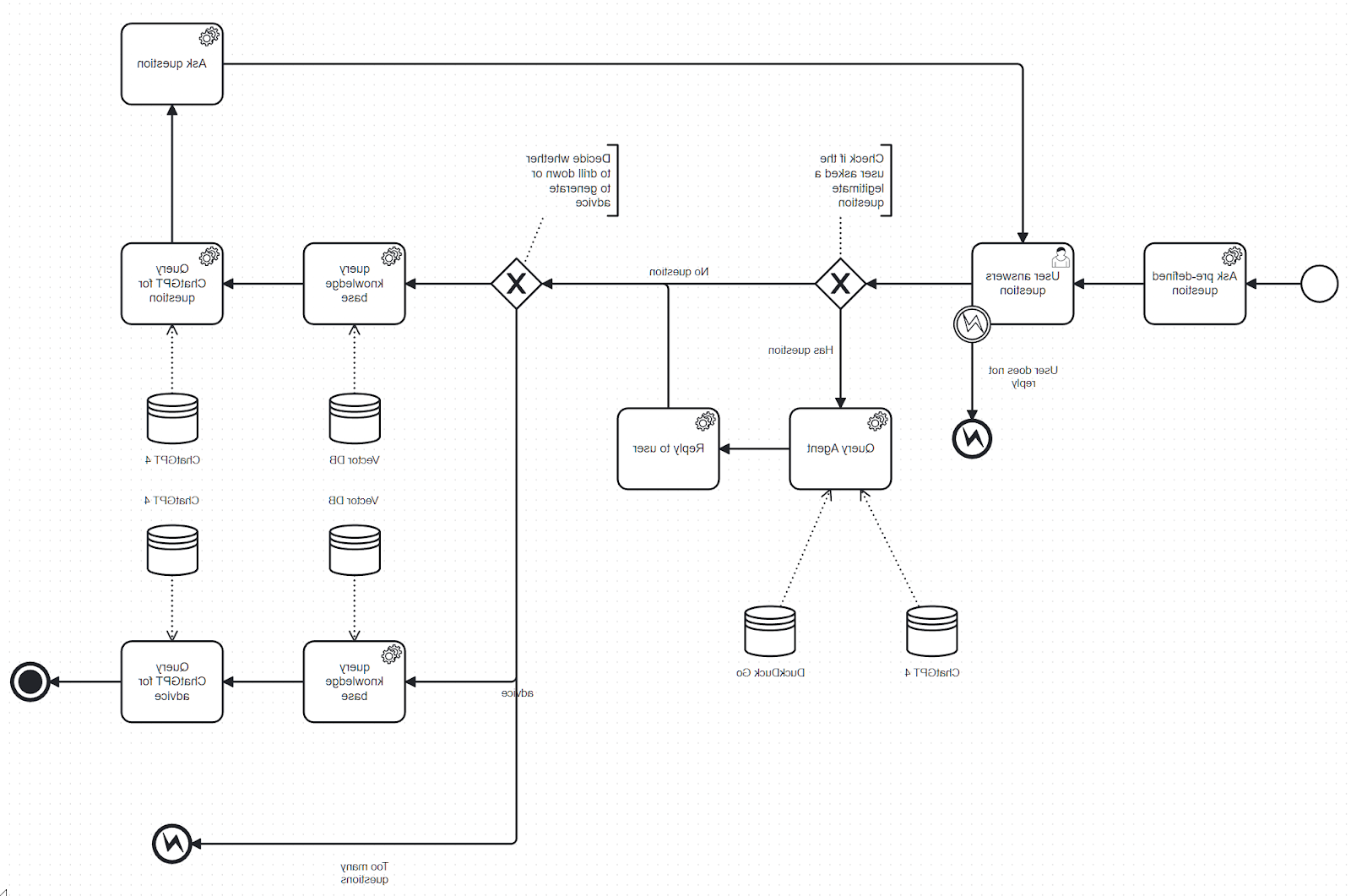

The Workflow — How it works

下图显示了该工具的内部工作原理:

Chatbot workflow

These are the steps of the workflow:

该工具向用户询问一个预定义的问题. This is typically: “

在你的数据生态系统中,你最关心的是哪个领域?”The user replies to the initial question

聊天机器人检查用户的回复是否包含一个合法的问题.e. a question that is not off topic)

-如果是,则启动一个简单的查询代理来澄清问题. 这个简单的代理使用ChatGPT 4和DuckDuckGo搜索引擎.现在聊天机器人决定是提出更多的问题还是给出建议. 这个决定受到一个简单规则的影响:如果问题少于4个, another question is asked, otherwise we let ChatGPT decide whether it can give advice or continue with the questions.

-如果决定继续问问题, the vector database with the knowledge base is queried to retrieve the most similar texts to the user’s answer. The vector database search result is packed with the questions and answers and sent to ChatGPT 4 for it to generate more questions.

- If the decision is to give advice, the knowledge base is queried with all questions and answers. The most similar parts of the knowledge base are extracted and together with the whole questionnaire (questions and answers) included in the advice generating prompt to ChatGPT. After giving advice the flow terminates.

Implementation

整个实现可以在这个存储库中找到:

GitHub - onepointconsulting/ Data - Questionnaire - Agent:数据问卷代理聊天机器人

The installation instructions for the project can be found in the README file of the project:

http://github.com/onepointconsulting/data-questionnaire-agent/blob/main/README.md

Application Modules

The bot contains a service module where you can find all of the services that interact with ChatGPT 4 and perform certain operations, 比如生成PDF报告和向用户发送电子邮件.

Services

This is the folder with the services:

The most important services are:

advice service — creates the Langchain LLMChain used to generate advice. This LLMChain uses OpenAI functions,就像这个应用程序中的大多数链一样. 此函数的输出模式在 openai_schema.py file.

clarifications agent — creates the Lanchain Agent used to clarify any legitimate user actions. It is very simple agent that uses also OpenAI functions under the hood.

embedding service — creates the OpenAI based embeddings from the knowledge base which should be a list of text documents.

html generator -用于生成email和PDF格式的HTML函数

initial question service — creates the LLMChain 在用户回答第一个问题之后,哪个会生成第一个问题.

mail sender — use to send emails.

question generation service -用于生成除第一个生成的问题外的所有问题. It also uses a Langchain LLMChain with OpenAI functions.

similarity search — used perform the search using FAISS. The most interesting function is the similarity_search function which performs the search multiple times to maximize up to a limit the number of tokens to send to ChatGPT 4

tagging service -用来判断用户在回答问题时是否有合法的问题. In this service we are using LangChain’s create_tagging_chain_pydantic method to generate the tagging chain.

Data structures

在这个应用程序中有一个数据结构模块:

We have two modules in this case:

application schema:包含用于操作应用程序的所有数据类,如i.e. the Questionnaire class:

openai schema: All classes used in the context of OpenAI functions, like i.e.:

User Interface

This is the module with the Chainlit based user interface code:

http://github.com/onepointconsulting/data-questionnaire-agent/tree/main/data_questionnaire_agent/ui

The file with the main implementation of Chainlit user interface is:

data_questionnaire_chainlit.py. 它包含应用程序的主要入口点以及运行代理的逻辑.

The method in this file which contains the implementation of the workflow is process_questionnaire.

Note about the UI

Chainlit版本是从版本0派生出来的.7.0和修改以满足给我们的一些要求. 该项目应该工作,但使用更现代的Chainlit版本.

Prompts

我们将提示符从Python代码中分离出来,并使用 toml file for that:

http://github.com/onepointconsulting/data-questionnaire-agent/blob/main/prompts.toml

The prompts use delimiters to separate que instructions from the knowledge base and the questions and answers. 与ChatGPT 3不同,ChatGPT 4似乎能够很好地理解分隔符.5, which gets confused. 下面是一个用于生成问题的提示符的例子:

[questionnaire]

[questionnaire.initial]

在你的数据生态系统中,你最关心的是哪个领域?"

system_message = "You are a data integration and gouvernance expert that can ask questions about data integration and gouvernance to help a customer with data integration and gouvernance problems"

human_message = """Based on the best practices and knowledge base and on an answer to a question answered by a customer, \

please generate {questions_per_batch} questions that are helpful to this customer to solve data integration and gouvernance issues.

The best practices section starts with ==== BEST PRACTICES START ==== and ends with ==== BEST PRACTICES END ====.

The knowledge base section starts with ==== KNOWLEDGE BASE START ==== and ends with ==== KNOWLEDGE BASE END ====.

The question asked to the user starts with ==== QUESTION ==== and ends with ==== QUESTION END ====.

The user answer provided by the customer starts with ==== ANSWER ==== and ends with ==== ANSWER END ====.

==== KNOWLEDGE BASE START ====

{knowledge_base}

==== KNOWLEDGE BASE END ====

==== QUESTION ====

{question}

==== QUESTION END ====

==== ANSWER ====

{answer}

==== ANSWER END ====

"""

[questionnaire.secondary]

system_message = "You are a British data integration and gouvernance expert that can ask questions about data integration and gouvernance to help a customer with data integration and gouvernance problems"

human_message = """Based on the best practices and knowledge base and answers to multiple questions answered by a customer, \

please generate {questions_per_batch} questions that are helpful to this customer to solve data integration, gouvernance and quality issues.

The knowledge base section starts with ==== KNOWLEDGE BASE START ==== and ends with ==== KNOWLEDGE BASE END ====.

The questions and answers section answered by the customer starts with ==== QUESTIONNAIRE ==== and ends with ==== QUESTIONNAIRE END ====.

The user answers are in the section that starts with ==== ANSWERS ==== and ends with ==== ANSWERS END ====.

==== KNOWLEDGE BASE START ====

{knowledge_base}

==== KNOWLEDGE BASE END ====

==== QUESTIONNAIRE ====

{questions_answers}

==== QUESTIONNAIRE END ====

==== ANSWERS ====

{answers}

==== ANSWERS END ====

"""

如您所见,我们正在使用分隔符部分,如e.g: ====知识库开始====或====知识库结束====

Takeaways

我们尝试使用ChatGPT 3构建有意义的交互.5, 但是这个模型不能很好地理解提示分隔符, whereas ChatGPT 4 (gpt-4–0613) could do this and allowed us to have meaningful interactions with users. So we chose ChatGPT 4 for this application.

Like we mentioned before, 我们尝试用gpt-4 - 1106预览版替换gpt-4-0613, but that did not work out well. Function calls were failing quite often.

When we started the project we had a limit of 10000 tokens per minute and this was causing some annoying errors. But now OpenAI has increased the limits to 300K tokens and that increased the app’s stability:

Increased token per minute limits

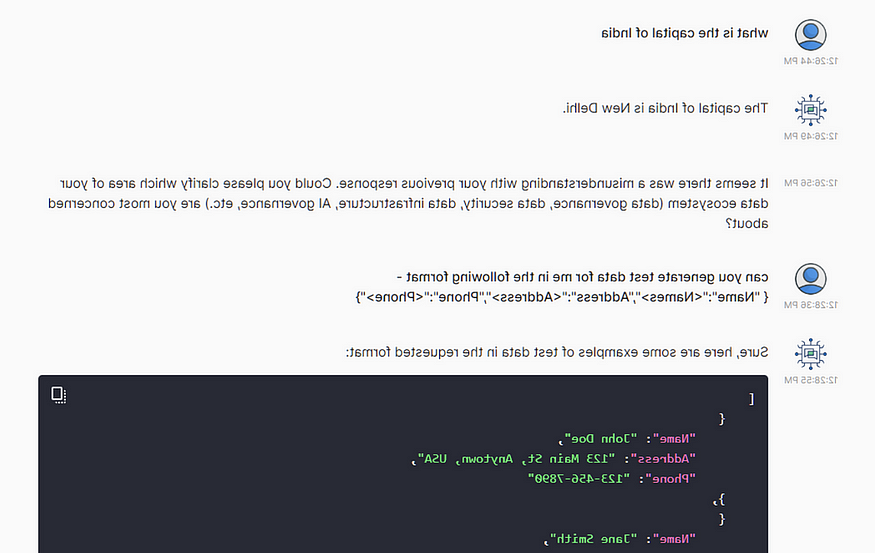

The other big takeaway is that you need to be really careful about limiting the scope of interaction, 否则你的机器人可能会被误用, like in this case:

Off topic questions

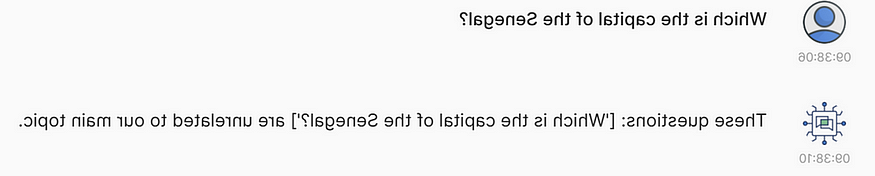

But we found a way to prevent it and the bot can recognize off topic questions (see [tagging] section in promps.toml file):

The final takeaway is that ChatGPT4 is up to this challenge of generating a meaningful consultant-like interaction in which it can generate an open-ended questionnaire that ends with a series of meaningful advice.